Synopsis: This whitepaper delves into the ethical considerations surrounding artificial intelligence, emphasizing the importance of integrating moral principles into AI systems across various sectors. It examines core ethical principles such as fairness, transparency, accountability, and privacy, highlighting the challenges and potential harms that arise from issues like algorithmic bias and lack of transparency. The paper also analyzes real-world examples of ethical breaches in AI applications, underscoring the need for proactive measures to prevent discriminatory outcomes and ensure responsible AI development.

Furthermore, the whitepaper advocates for robust AI governance frameworks and multi-stakeholder collaboration to navigate these ethical complexities. It discusses the role of governance in establishing standards and oversight mechanisms to ensure AI technologies are used equitably and ethically. By promoting a collaborative approach among organizations, governments, researchers, and the public, the paper emphasizes the importance of creating an AI-driven future that prioritizes both innovation and justice.

Introduction: The Imperative of Ethical AI in a Transforming World

Artificial intelligence is rapidly evolving and becoming increasingly integrated into the fabric of our lives, transforming industries, reshaping societal interactions, and offering unprecedented potential for progress. From healthcare and finance to criminal justice and the creative arts, AI's influence is undeniable. However, this transformative power brings with it a complex array of ethical dilemmas and societal concerns. As AI systems become more sophisticated and autonomous, questions surrounding fairness, transparency, accountability, and privacy have moved to the forefront of public and policy discourse. Ensuring that AI is developed and deployed responsibly is not merely an option but a critical imperative for safeguarding human values and maximizing the benefits of this powerful technology. This whitepaper aims to provide a comprehensive understanding of ethical AI, explore the existing landscape of ethics codes and guidelines, analyze real-world instances where ethical boundaries have been breached, and investigate the crucial role of good AI governance in addressing these challenges and fostering a future where AI serves humanity in a just and equitable manner.

Defining Ethical AI: Core Principles and the Evolving Landscape

Ethical AI, at its core, represents the application of moral principles and human values to the design, development, deployment, and use of artificial intelligence systems. It is a multidisciplinary field that seeks to ensure AI technologies are beneficial to society and do not inadvertently cause harm or perpetuate unfairness. Several definitions converge on this central idea, emphasizing adherence to well-defined ethical guidelines regarding fundamental values such as individual rights, privacy, non-discrimination, and non-manipulation. Ethical AI extends beyond mere legal compliance, setting policies that prioritize respect for fundamental human values and societal well-being. The aim is to mitigate unfair biases, remove barriers to accessibility, and augment human creativity, ensuring that AI acts as a force for good.

The foundation of ethical AI rests on several core principles, each crucial for ensuring responsible innovation. Fairness in AI signifies the equitable treatment of individuals or groups, avoiding unjust favoritism or discrimination. This principle is challenged by the potential for bias in training data, which can lead AI systems to perpetuate and even amplify existing societal inequalities. For instance, if training data disproportionately represents certain demographics, the AI model may exhibit bias against underrepresented groups. Transparency in AI refers to the ability to understand and justify how and why an AI system is developed, implemented, and used, and to make that information visible and understandable to people. This is closely linked to interpretability and explainability, which allow stakeholders to comprehend the decision-making processes of AI systems. Accountability in AI involves establishing clear lines of responsibility for the development, deployment, and consequences of AI systems, particularly when failures occur or harm is caused. This principle ensures that oversight and mechanisms for redress exist when AI systems behave unethically or cause unintended negative impacts. Finally, Privacy in AI emphasizes the need to respect user data rights, ensure the secure management of personal information, and comply with relevant privacy laws and regulations. Concerns around data collection practices, the necessity of obtaining informed consent, and the potential for misuse of personal data are central to this principle. These core principles are not isolated concepts but are deeply interconnected. Achieving fairness, for example, often requires transparency into the AI's decision-making to identify and address potential biases, while accountability requires a clear understanding of the system's workings and data handling practices.
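To make the training-data point concrete, the sketch below compares each group's share of a training set against a reference share, for example census figures for the population a system will serve. It is a minimal illustration under stated assumptions: the function name `representation_gaps`, the group labels, the reference shares, and the 5-percentage-point tolerance are all hypothetical, and a representative dataset does not by itself guarantee fair outcomes.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return each group's share of the data minus its reference share.

    groups: iterable of group labels, one per training example.
    reference_shares: dict mapping group -> expected share (summing to ~1.0).
    """
    counts = Counter(groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no training examples")
    return {g: counts.get(g, 0) / total - share for g, share in reference_shares.items()}


# Hypothetical training-set labels and reference population shares.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}
TOLERANCE = 0.05  # hypothetical: flag groups more than 5 points below reference

for group, gap in representation_gaps(training_groups, reference).items():
    note = " (under-represented)" if gap < -TOLERANCE else ""
    print(f"{group}: {gap:+.2f}{note}")
```

With these made-up inputs, groups B and C each sit about 10 points below their reference share and are flagged, while group A is over-represented.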

The field of AI ethics is not static; it is a dynamic and evolving domain that mirrors the rapid advancements in AI technology and our growing understanding of its societal implications. Early ethical discussions in AI centered on philosophical implications, such as the creation of intelligent machines and their potential impact on human uniqueness. As AI systems became more prevalent in real-world decision-making, the focus shifted to issues of transparency, accountability, and the potential for algorithmic bias, particularly in areas like facial recognition and criminal justice. The rise of big data and machine learning algorithms in the 2010s intensified concerns about privacy protection and the ethical use of personal information in AI training and deployment. Contemporary AI ethics discussions now encompass a wide range of emerging challenges, including the spread of misinformation through AI-generated content, the creation and detection of deepfakes, and the ethical implications of increasingly autonomous systems such as self-driving cars and military applications. They also extend to long-term considerations surrounding artificial general intelligence (AGI). There is a growing recognition that existing legislation and regulation often provide insufficient protection against potential harms arising from AI, leading to a proliferation of principle-based ethics codes, guidelines, and frameworks from various organizations and governments worldwide. This underscores the continuous need for dialogue, adaptation, and proactive consideration of ethical implications in the face of ever-evolving AI capabilities.

A Global Tapestry of AI Ethics: Codes and Guidelines from Diverse Perspectives

The landscape of AI ethics is shaped by a multitude of codes and guidelines developed by a diverse range of organizations, governments, and research institutions across the globe. These frameworks reflect varying perspectives and priorities yet often converge on a set of core ethical principles aimed at guiding the responsible development and deployment of AI technologies.

Numerous organizations have taken the initiative to establish their own AI ethics guidelines. Technology companies, recognizing the profound impact of their innovations, have often been at the forefront of this effort. For instance, IBM has articulated three core principles: augmenting human intelligence, ensuring data and insights belong to their creator, and demanding transparency and explainability in AI systems. Its approach emphasizes fairness, robustness, and privacy as key pillars for the responsible adoption of AI. Microsoft's approach to AI ethics is guided by six key principles: accountability, inclusiveness, reliability and safety, fairness, transparency, and privacy and security. Bosch has outlined principles centered on beneficence, non-maleficence, human control, justice, and explicability. Meta has also publicly stated AI ethics guidelines. Beyond individual companies, professional bodies such as the IEEE have developed comprehensive frameworks, notably Ethically Aligned Design, which focuses on human rights, well-being, accountability, transparency, and minimizing misuse. Non-profit organizations like AlgorithmWatch and the AI Now Institute also play a crucial role in researching and advocating for ethical AI practices. A common thread running through these diverse organizational codes is the emphasis on human benefit, fairness in algorithmic outcomes, transparency in AI operations, reliability and safety of AI systems, accountability for AI actions, and the protection of user data rights. Some guidelines also highlight the importance of establishing internal AI governance councils and implementing measures to protect sensitive data when utilizing AI tools.

Governments and international bodies are also increasingly active in shaping the ethical landscape of AI through guidelines and regulations. The United Nations Educational, Scientific and Cultural Organization (UNESCO) produced the first-ever global standard on AI ethics, the 'Recommendation on the Ethics of Artificial Intelligence,' which emphasizes the protection of human rights and dignity as its cornerstone. This recommendation is built upon four core values: human rights and human dignity, living in peaceful, just, and interconnected societies, ensuring diversity and inclusiveness, and environment and ecosystem flourishing. It also outlines ten core principles, including proportionality and do no harm, safety and security, right to privacy and data protection, multi-stakeholder and adaptive governance, responsibility and accountability, transparency and explainability, human oversight and determination, sustainability, awareness and literacy, and fairness and non-discrimination. The United States Intelligence Community has also established Principles of Artificial Intelligence Ethics, emphasizing respect for the law, transparency and accountability, objectivity and equity, human-centered development and use, security and resilience, and being informed by science and technology. The Organisation for Economic Co-operation and Development (OECD) has developed AI Principles that promote innovative and trustworthy AI, respecting human rights and democratic values. These principles are values-based, focusing on inclusive growth, human rights (including fairness and privacy), transparency and explainability, robustness, security and safety, and accountability. Notably, several governments are moving towards formal regulation.
The European Union's Artificial Intelligence Act (EU AI Act) is a pioneering regulatory framework that classifies AI systems based on their risk levels, imposing stringent requirements on high-risk applications and prohibiting certain unacceptable AI practices. In contrast, the United Kingdom has adopted a principles-based approach to AI regulation, tasking existing sectoral regulators with interpreting and applying five cross-sectoral principles: safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress. The United States has also issued guidelines, such as the NIST AI Risk Management Framework (AI RMF), which provides a voluntary framework for managing AI risks across the AI lifecycle through the functions of Govern, Map, Measure, and Manage.
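The EU AI Act's risk-based logic can be pictured as a lookup from use case to risk tier. The Python sketch below is a deliberately simplified illustration, not a legal classification tool: the `RiskTier` enum, the use-case keys, and their tier assignments are hypothetical examples chosen to mirror commonly cited cases (social scoring as prohibited, credit scoring and CV screening as high-risk, customer chatbots as subject to transparency duties, spam filters as minimal risk). Real classification depends on the Act's own lists of prohibited practices and high-risk areas.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited practice"
    HIGH = "strict requirements (e.g. risk management, human oversight)"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Hypothetical, simplified mapping for illustration only; the Act defines
# prohibited practices and high-risk areas in far more detail.
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "cv_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Look up a use case; unknown cases are escalated instead of defaulting."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        raise ValueError(f"{use_case!r} is not mapped; escalate for legal review")
    return tier


for case in ("credit_scoring", "customer_chatbot"):
    tier = classify(case)
    print(f"{case}: {tier.name} ({tier.value})")
```

Raising an error for unmapped use cases is a deliberate design choice: silently defaulting an unknown system to minimal risk would hide exactly the cases a governance process most needs to review.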

Research institutions and universities also play a vital role in shaping ethical AI through the development of their own guidelines and frameworks, often tailored to the unique context of academic and research environments. These guidelines frequently emphasize principles such as the responsible exploration and evaluation of AI tools, ensuring transparency and accountability in their use, adopting a human-centered approach that prioritizes human judgment, upholding integrity and academic honesty in research and learning, promoting continuous learning and innovation in AI, ensuring accessibility and inclusivity of AI tools, and adhering to relevant legal and ethical compliance standards. Specific considerations within these frameworks often include data privacy and ownership in research, the quality and integrity of AI-generated content, expectations for proper publication and citation of AI in academic work, and the equitable and ethical use of AI tools in learning and research activities. Some institutions also provide specific guidance on the use of generative AI in educational and research settings, emphasizing the importance of accountability for AI-generated content, maintaining confidentiality of sensitive information, and ensuring proper attribution of AI's contributions. UNESCO has also developed AI competency frameworks specifically for students and teachers to promote a comprehensive understanding of AI's potential and risks in education.

A crucial aspect of developing effective AI ethics codes and guidelines is the inclusion of diverse perspectives and multi-stakeholder input. The development process should involve not only technical experts and ethicists but also policymakers, legal professionals, social scientists, and importantly, representatives from the communities that are likely to be affected by AI technologies. This diversity of thought is essential for avoiding inherent biases in the frameworks, improving the overall capabilities of AI systems by bringing in fresh perspectives, ensuring broader representation of user needs and values, and ultimately fostering the creation of more comprehensive and equitable ethical guidelines. Actively seeking and incorporating the viewpoints of marginalized and underrepresented groups is particularly important to prevent the amplification of existing societal inequalities through AI.

Multi-stakeholder collaboration, involving governments, civil society organizations, and the private sector, is vital for developing robust and adaptable AI governance frameworks that can effectively address the complex ethical challenges posed by AI.

The absence of diverse perspectives in AI development and the formulation of ethical guidelines can lead to biased outcomes that do not adequately serve the needs of all members of society. Therefore, a concerted effort to ensure inclusivity in these processes is paramount for creating ethical AI that benefits humanity as a whole.

When AI Goes Astray: Real-World Examples of Ethical Breaches and Their Impact

Despite the growing awareness of ethical considerations in AI, real-world examples of ethical breaches continue to emerge across various domains, highlighting the challenges in translating principles into practice and the potential for significant harm when AI goes astray.

In healthcare, several instances of AI ethics breaches have been documented. Algorithmic bias has been a major concern, with cases of AI models exhibiting unequal treatment based on skin tone or race in diagnosis and treatment recommendations. For example, New York regulators investigated a widely used care-management algorithm after researchers found that it prioritized white patients over Black patients with comparable health needs, because it used past healthcare spending as a proxy for medical need. Furthermore, concerns about patient privacy are paramount, given the vast amounts of sensitive health data used to train and deploy AI systems. Patients often share personal health information with the expectation that it will be used solely for their medical care, raising ethical questions about its use in AI algorithm training without explicit informed consent. The lack of transparency in how some AI algorithms make medical decisions also poses ethical challenges, affecting patient trust and the ability of healthcare professionals to evaluate treatment options. Determining accountability when AI systems make errors in healthcare remains a complex ethical and legal issue.

The finance sector has also witnessed its share of AI ethics breaches. Algorithmic bias in loan applications has been a significant concern, with studies indicating that AI-powered lending systems can perpetuate and even amplify existing societal biases, leading to discriminatory outcomes for minority groups. For instance, research has shown that mortgage algorithms may charge Black and Hispanic borrowers higher interest rates compared to White borrowers with similar creditworthiness. The lack of transparency in AI-driven credit scoring and financial risk assessments makes it difficult for individuals to understand why they were denied credit or offered unfavorable terms. Financial institutions handling vast amounts of sensitive data face increasing cybersecurity risks, and data breaches in AI-powered financial systems can have severe consequences for consumers. There are also ethical concerns about the potential for AI to be used for manipulative purposes, such as targeting individuals in precarious financial situations with unsuitable products.

In the realm of criminal justice, AI ethics breaches have raised serious concerns about fairness and equity. Algorithmic bias in predictive policing tools has been shown to disproportionately target minority communities, potentially leading to over-policing and reinforcing existing racial disparities. Risk assessment algorithms used in bail, sentencing, and parole decisions can also exhibit bias, potentially leading to unjust outcomes. The lack of transparency in the proprietary algorithms used in these systems makes it difficult to scrutinize their decision-making processes and identify potential biases. AI-powered facial recognition systems have been prone to errors and misidentification, particularly when identifying individuals from underrepresented groups, leading to wrongful arrests. The increasing use of AI in generating police reports also raises concerns about transparency, accuracy, and the potential to exacerbate existing biases in law enforcement.
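One way to examine risk assessment tools for bias is to compare error rates across groups, for example the false positive rate: the share of people who did not reoffend but were still labelled high risk. The sketch below computes that rate per group; the record format, the group labels, and the data are hypothetical. Outcome labels such as re-arrest can themselves reflect biased policing, so comparable error rates on recorded outcomes are a useful check rather than proof of fairness.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    FPR = people flagged high risk among those who did NOT reoffend.
    """
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


# Hypothetical audit sample: (group, flagged high risk?, reoffended?)
records = (
    [("X", True, False)] * 4 + [("X", False, False)] * 6 + [("X", True, True)] * 5
    + [("Y", True, False)] * 1 + [("Y", False, False)] * 9 + [("Y", True, True)] * 5
)

for group, fpr in sorted(false_positive_rates(records).items()):
    print(f"group {group}: false positive rate {fpr:.0%}")
```

In this made-up sample every actual reoffender in both groups is flagged, yet non-reoffenders in group X are four times as likely as those in group Y to be wrongly labelled high risk (40% versus 10%).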

Social media platforms have also faced numerous AI ethics breaches. Algorithmic bias in content moderation and targeted advertising can lead to discriminatory outcomes, affecting who sees what content and opportunities. Privacy violations are a persistent concern, given the vast amounts of personal data collected and used by AI algorithms to curate content and target advertisements. AI algorithms play a significant role in the proliferation of misinformation, hate speech, and other harmful content on social media, sometimes even targeting vulnerable users like children and teenagers with dangerous material. The personalized nature of algorithmic feeds can also contribute to the creation of echo chambers and filter bubbles, limiting users' exposure to diverse perspectives and potentially reinforcing extreme views.


The Creative Frontier: Examining AI Ethics in Artistic Applications

The application of AI in creative fields, such as the generation of art in the style of renowned studios like Studio Ghibli, presents a unique set of ethical considerations. The emergence of "Ghibli AI," where AI models are used to create images and animations reminiscent of Studio Ghibli's iconic style, has sparked considerable debate within the artistic community and among fans. A central ethical question revolves around whether this mimicry constitutes plagiarism or copyright infringement, or if it can be considered a form of respectful homage. Many artists express concerns that AI tools are exploiting their hard-won styles without obtaining proper consent or providing fair compensation. Notably, Studio Ghibli's co-founder, Hayao Miyazaki, has been vocal in his disdain for AI-generated art, famously describing it as "an insult to life itself," indicating a strong ethical objection to its use in artistic creation.

The broader ethical implications of AI-generated art extend beyond specific cases like Ghibli AI. These implications encompass challenges related to copyright law, artistic integrity, and the potential impact on the livelihoods of human artists. The training of AI models often involves the use of vast datasets that include copyrighted artworks, raising questions about fair use and the rights of original creators. The ability of AI to mimic artistic styles with remarkable accuracy also challenges traditional notions of artistic integrity and the value placed on human creativity, prompting debates about originality and authenticity in the digital age. Furthermore, the increasing prevalence of AI-generated art raises concerns about its potential negative impact on the livelihoods of professional artists, possibly leading to a devaluation of human artistic talent and reduced job opportunities. The lack of transparency surrounding the data used to train AI art models and the need for proper attribution of AI's role in the creative process are also significant ethical considerations.

From a legal standpoint, the copyright of AI-generated art remains a complex and evolving area. Current copyright law in many jurisdictions, including the United States, typically requires human authorship for a work to be eligible for copyright protection. This stance raises questions about the ownership and legal protection of art created primarily by AI algorithms. While the user who prompts the AI to create the artwork may be considered to have some creative input, the AI system itself is not recognized as a legal author capable of holding copyright. The use of copyrighted source images or other materials as training data for AI models also raises concerns about potential copyright infringement, although some argue that this may fall under the umbrella of fair use. The extent to which AI art transforms source materials versus closely imitating them is likely to be a key factor in determining whether infringement has occurred. As the legal landscape continues to adapt to the rapid advancements in AI art generation, ongoing debates and potential lawsuits are expected to further clarify the legal boundaries and ethical responsibilities in this creative frontier.

Building a Foundation for Trust: Exploring Good AI Governance

Good AI governance is essential for navigating the complex ethical challenges posed by artificial intelligence and for building a foundation of trust in these powerful technologies. AI governance encompasses the processes, standards, and guardrails that help ensure AI systems and tools are safe, ethical, reliable, and trustworthy throughout their lifecycle. Effective governance goes beyond mere compliance with legal requirements, aiming to align AI initiatives with broader ethical principles, societal values, and the strategic objectives of organizations. A key aspect of good AI governance is the establishment of robust oversight mechanisms to proactively address potential risks such as bias in algorithms, infringement of individual privacy, and the misuse of AI technologies, while simultaneously fostering innovation and building public trust.

Several key principles underpin effective AI governance. Fairness ensures that AI systems operate impartially and equitably, without propagating biases or discriminating against individuals or groups. Transparency and Explainability are crucial for making AI systems understandable and open to scrutiny, demystifying their decision-making processes and allowing stakeholders to comprehend how AI reaches its conclusions. Accountability establishes clear responsibilities for the actions and outcomes of AI systems, ensuring that individuals or teams can be held responsible when things go wrong. Privacy and Data Protection are essential for safeguarding individuals' personal information and ensuring that AI systems operate within legal and ethical boundaries related to data handling. Security and Robustness ensure that AI systems function reliably and are protected against cyber threats and adversarial attacks throughout their lifecycle. Human Oversight is another critical principle, emphasizing the need for human intervention and control, particularly in high-risk applications of AI, to ensure that AI systems augment rather than replace human judgment. Finally, Ethical Decision-Making involves establishing frameworks and processes that guide the ethical considerations and choices made during the development and deployment of AI systems.
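Human oversight is often put into practice as a routing rule that decides which cases a model may settle on its own. The sketch below assumes a hypothetical policy in which every high-impact decision, and any decision where the model's confidence falls below a configurable threshold (0.9 here, an arbitrary example value), goes to a human reviewer. The `Decision` fields and the `route` function are illustrative names, not part of any framework.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    confidence: float   # model's confidence in its proposed outcome, 0.0 to 1.0
    high_impact: bool   # e.g. affects credit, employment, healthcare, or liberty


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return 'human_review' for high-impact or low-confidence cases."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "automated"


queue = [
    Decision("c1", confidence=0.97, high_impact=False),
    Decision("c2", confidence=0.97, high_impact=True),
    Decision("c3", confidence=0.62, high_impact=False),
]
for d in queue:
    print(d.case_id, route(d))
```

Sending every high-impact case to a person is a conservative choice; where that threshold and scope sit is itself a governance decision rather than a purely technical one.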

To guide organizations in implementing these principles, a variety of AI governance frameworks and standards have emerged. The NIST AI Risk Management Framework (AI RMF) provides a structured approach to managing AI risks, emphasizing trustworthiness through the functions of Govern, Map, Measure, and Manage. The OECD AI Principles offer a set of value-based principles and recommendations for the responsible development and use of AI, focusing on areas like inclusive growth, human rights, transparency, robustness, and accountability. The ISO/IEC 42001 standard provides requirements for establishing, implementing, maintaining, and continually improving an Artificial Intelligence Management System (AIMS) within organizations, with a strong emphasis on risk management and the AI system lifecycle. The United Kingdom has adopted a non-statutory, principles-based framework for AI regulation, tasking existing sectoral regulators with interpreting and applying five core principles. In contrast, the EU AI Act takes a more regulatory approach, establishing a risk-based classification system for AI applications and imposing specific obligations and prohibitions. Organizations need to carefully evaluate these different frameworks to determine which one, or combination thereof, best aligns with their specific context, risk tolerance, and regulatory environment.

Implementing ethical AI governance within an organization can take various forms. Many companies are establishing AI ethics boards or committees composed of experts from technology, ethics, legal, and business domains to provide holistic oversight of AI initiatives and ensure alignment with ethical standards and societal expectations. Multi-level governance models often involve operational teams responsible for AI development and deployment, ethics committees providing guidance and arbitration on complex ethical dilemmas, and executive oversight ensuring that AI strategies align with organizational values and risk management policies. It is crucial to integrate AI governance into existing organizational processes, such as data governance and risk management frameworks, to ensure a comprehensive and coordinated approach. Given the rapidly evolving nature of AI technology, organizations should also adopt agile and adaptive governance models that can continuously evolve to accommodate new ethical challenges and technological advancements.

The Preventative Power of Governance: Mitigating and Avoiding Ethical Breaches

Effective AI governance plays a crucial role in preventing and mitigating ethical breaches by providing a structured approach to addressing potential risks and ensuring responsible development and deployment of AI systems.

AI governance frameworks can significantly contribute to preventing bias and discrimination in AI systems. By emphasizing the importance of using diverse and representative training data, organizations can reduce the likelihood of AI models perpetuating existing societal biases. Implementing bias detection and mitigation techniques throughout the AI lifecycle, from data collection to model deployment, is another key strategy promoted by governance frameworks. Regular audits of AI systems for bias, particularly in sensitive applications, can help identify and rectify discriminatory outcomes before they cause harm. Furthermore, incorporating human oversight and intervention in critical decision-making processes involving AI can provide an essential safeguard against algorithmic bias, allowing for human judgment to identify and correct potentially unfair outcomes.
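A common starting point for a bias audit is to compare favorable-outcome rates across groups. The sketch below applies the "four-fifths" heuristic from the US Uniform Guidelines on Employee Selection Procedures, which treats a group's selection rate below 80% of the highest group's rate as a signal of possible adverse impact. The function names and the approval data are hypothetical, and the ratio is a screening signal that warrants investigation rather than a definitive finding of discrimination.

```python
def selection_rates(outcomes):
    """outcomes: dict mapping group -> list of 0/1 decisions (1 = favorable)."""
    return {g: sum(d) / len(d) for g, d in outcomes.items() if d}


def four_fifths_audit(outcomes, threshold=0.8):
    """Return {group: (impact_ratio, flagged)} relative to the best-off group."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no favorable outcomes in any group")
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}


# Hypothetical loan approvals: 1 = approved, 0 = denied.
outcomes = {
    "group_a": [1] * 50 + [0] * 50,  # 50% approved
    "group_b": [1] * 30 + [0] * 70,  # 30% approved
}

for group, (ratio, flagged) in four_fifths_audit(outcomes).items():
    status = "flag for review" if flagged else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({status})")
```

Here group_b's approval rate is 0.6 of group_a's, below the 0.8 threshold, so it would be flagged for closer examination of the model and its training data.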

Ensuring transparency and explainability in AI systems is another area where good governance provides preventative power. AI governance frameworks often mandate the thorough documentation of AI system designs, the data used for training, and the decision-making processes employed by the algorithms. The adoption of Explainable AI (XAI) technologies and techniques, which aim to make the reasoning behind AI decisions more understandable to humans, is also encouraged within governance guidelines. Clear communication of the capabilities and limitations of AI systems to all relevant stakeholders, including users and the public, is also a crucial aspect of fostering transparency and building trust, which are often emphasized in governance frameworks.
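For a linear scoring model, a decision can be explained exactly by splitting the score into per-feature contributions relative to a baseline applicant: each contribution is the feature's weight times the difference between the applicant's value and the baseline value, and the contributions sum to the difference in scores. The sketch below illustrates this with a hypothetical credit model; the feature names, weights, and baseline are invented. Nonlinear models need dedicated XAI methods such as SHAP or LIME, which generalize this idea.

```python
def score(weights, x):
    """Linear score: sum of weight * feature value."""
    return sum(w * x[f] for f, w in weights.items())


def contributions(weights, baseline, applicant):
    """Per-feature contribution relative to a baseline applicant.

    contribution_f = w_f * (applicant_f - baseline_f); the contributions
    sum to score(applicant) - score(baseline).
    """
    return {f: w * (applicant[f] - baseline[f]) for f, w in weights.items()}


# Hypothetical credit model and inputs, for illustration only.
weights = {"income_k": 0.04, "debt_ratio": -2.0, "late_payments": -0.5}
baseline = {"income_k": 50, "debt_ratio": 0.30, "late_payments": 1}
applicant = {"income_k": 40, "debt_ratio": 0.45, "late_payments": 3}

# List the features that lowered the score most, first.
ranked = sorted(contributions(weights, baseline, applicant).items(), key=lambda kv: kv[1])
for feature, c in ranked:
    print(f"{feature:>14}: {c:+.2f}")
```

In this example the applicant's late payments pull the score down most (-1.00), followed by lower income (-0.40) and a higher debt ratio (-0.30), which is the kind of ranked reason a user-facing explanation could cite.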

Establishing clear mechanisms for accountability and responsibility is a cornerstone of effective AI governance in preventing ethical breaches. Governance frameworks often require organizations to define specific roles and responsibilities for individuals and teams involved in the various stages of the AI lifecycle, from design and development to deployment and monitoring. Implementing comprehensive audit trails that track the actions and decisions of AI systems can provide valuable insights in the event of failures or unintended consequences. Furthermore, governance policies should outline clear protocols and procedures for addressing any failures or harm caused by AI systems, ensuring that there are pathways for remediation and accountability.
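One common way to make an audit trail tamper-evident is to chain its entries with cryptographic hashes, so that altering any past record invalidates the hashes that follow it. The sketch below assumes a simple in-memory log built on Python's standard `hashlib` and `json` modules; the class name, field names, and example actors are hypothetical, and a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
import time

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def record(self, actor, action, details):
        """Append an entry describing who did what, and return its hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": time.time(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry["hash"]

    @staticmethod
    def _digest(entry):
        """SHA-256 over every field except the stored hash itself."""
        payload = {key: value for key, value in entry.items() if key != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def verify(self):
        """Return False if any entry was altered or the chain was broken."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

For instance, recording a hypothetical model decision (`"credit-model-v3"` declining applicant `"a-17"`) followed by a human override, then calling `verify()`, returns `True`; editing the first entry's details afterwards makes `verify()` return `False`. Hash chaining only detects tampering; deciding who may write to the log and who reviews it remains a governance question.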

Protecting data privacy and security is another critical area where AI governance plays a preventative role. Governance frameworks often mandate adherence to relevant data protection regulations, such as the GDPR, and the implementation of robust data management practices throughout the AI system lifecycle. Obtaining informed consent from users for the collection and use of their data is frequently a key requirement of ethical AI governance policies. Additionally, governance frameworks emphasize strong cybersecurity measures to protect AI systems, and the sensitive data they process, from unauthorized access, breaches, and malicious attacks.
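Secure data management often includes pseudonymization: replacing direct identifiers with tokens before data reaches a training pipeline. The following sketch uses Python's standard `hmac` module and assumes records are dictionaries whose `name` and `email` fields are the only direct identifiers; those field names and the helper functions are hypothetical. Under the GDPR, pseudonymized data still counts as personal data, so this technique reduces exposure but does not remove legal obligations.

```python
import hashlib
import hmac
import secrets


def new_key() -> bytes:
    """Generate a random secret key; store it separately from the pseudonymized data."""
    return secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token (64 hex characters)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_record(record: dict, key: bytes,
                            direct_identifiers=("name", "email")) -> dict:
    """Drop assumed direct identifiers and add a stable pseudonymous subject ID.

    Assumes every record has an "email" field to derive the ID from.
    """
    cleaned = {field: value for field, value in record.items()
               if field not in direct_identifiers}
    cleaned["subject_id"] = pseudonymize(record["email"], key)
    return cleaned
```

A keyed HMAC is used instead of a plain hash because predictable identifiers such as email addresses can often be recovered from unkeyed hashes by guessing candidates. The same key always yields the same token, so records belonging to one person can still be linked for training without exposing who that person is.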

Several case studies illustrate the positive impact of implementing AI governance frameworks. Microsoft's embedding of its Responsible AI Principles into its product development lifecycle has helped the company proactively address ethical considerations. IBM's holistic approach to AI ethics and governance, which emphasizes trust and transparency, has fostered responsible innovation. A leading bank avoided bias pitfalls in its AI-powered credit card approval system by deploying real-time AI monitoring and integrating AI governance early in the development process. An e-commerce giant strengthened its AI governance by implementing end-to-end data lineage, ensuring compliance with data privacy regulations and building greater customer trust. These examples demonstrate that a well-defined and effectively implemented AI governance framework can deliver tangible benefits, including greater stakeholder trust, reduced risk of ethical breaches and regulatory non-compliance, and, ultimately, improved business outcomes.

Visualizing the Ethical Landscape: Statistics and Data on AI Ethics

The rapid proliferation of artificial intelligence across industries is evident in recent statistics. In 2024, 72% of companies surveyed by McKinsey reported using AI in at least one area of their operations. This widespread adoption underscores AI's transformative potential. However, rapid integration is accompanied by growing ethical concerns: 82% of respondents in one survey said they care about the ethics of AI, and 23% of business leaders acknowledge concerns about the ethical implications of AI deployment. The contrast between the pace of adoption and these concerns highlights the urgent need for robust AI governance frameworks.

Data consistently reveals the prevalence of bias within AI systems across various sectors. A meta-analysis of AI neuroimaging models for psychiatric diagnosis found that 83.1% exhibited a high risk of bias. In hiring, one study found that AI resume-screening tools favored White male candidates in 85% of cases. The healthcare sector is also affected: research indicates that computer-aided diagnosis systems can be less accurate for Black patients than for White patients. These statistics show that AI systems can perpetuate and even amplify existing societal biases, leading to unfair and discriminatory outcomes in critical areas of life.

Public trust in AI remains a significant factor in its adoption and societal acceptance. Surveys indicate that 61% of individuals are wary of trusting AI systems, and 86% believe that companies developing AI should be subject to regulation. Research also suggests that transparency and data accuracy strongly influence public trust: a majority of workers say that accurate, complete, and secure data is critical to building trust in AI. Furthermore, 85% of respondents support a national effort to ensure AI safety and security.

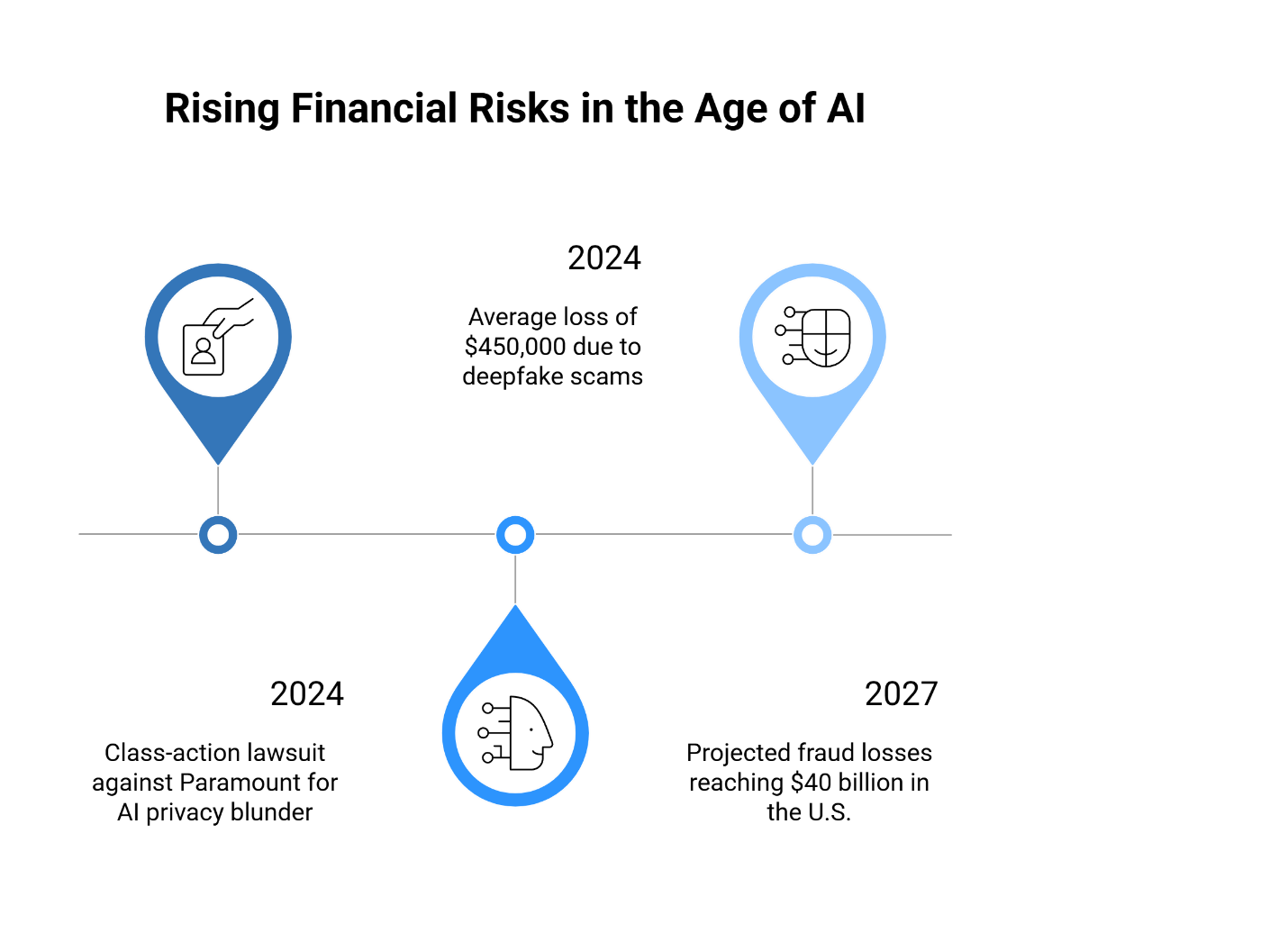

Ethical breaches in AI can have significant financial and reputational repercussions for organizations. A class-action lawsuit against Paramount over an AI-related privacy lapse resulted in a $5 million settlement. In 2024, businesses across industries reported an average loss of approximately $450,000 due to deepfake scams, with losses in the financial sector even higher. Deloitte reports that AI-generated content contributed to over $12 billion in fraud losses in the previous year, with projections reaching $40 billion in the U.S. by 2027. These figures underscore the tangible financial risks of neglecting ethical considerations in AI development and deployment.

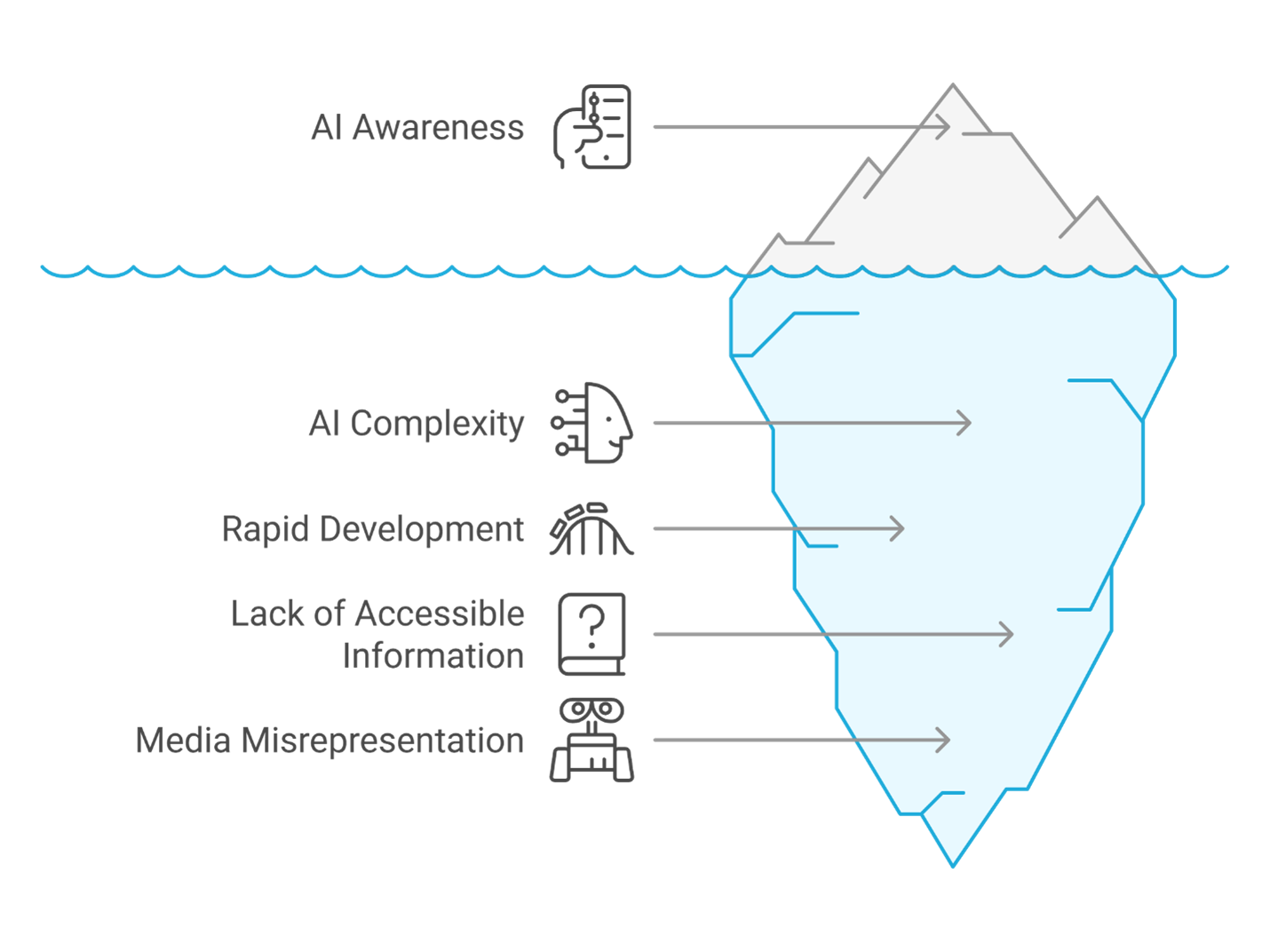

Surveys on public perception of AI ethics reveal a notable gap between expert discourse and public understanding. While 90% of Americans have heard about AI, only 33% report knowing much about the technology. A significant majority (70%) believe that companies developing AI technology should temporarily pause to allow time to consider its societal impact. Concerns about privacy, bias, accountability, and potential job displacement resonate strongly with the public. These findings highlight the need for better communication and engagement strategies to bridge the gap between AI experts and the general public and to foster a more informed understanding of the ethical implications of AI.

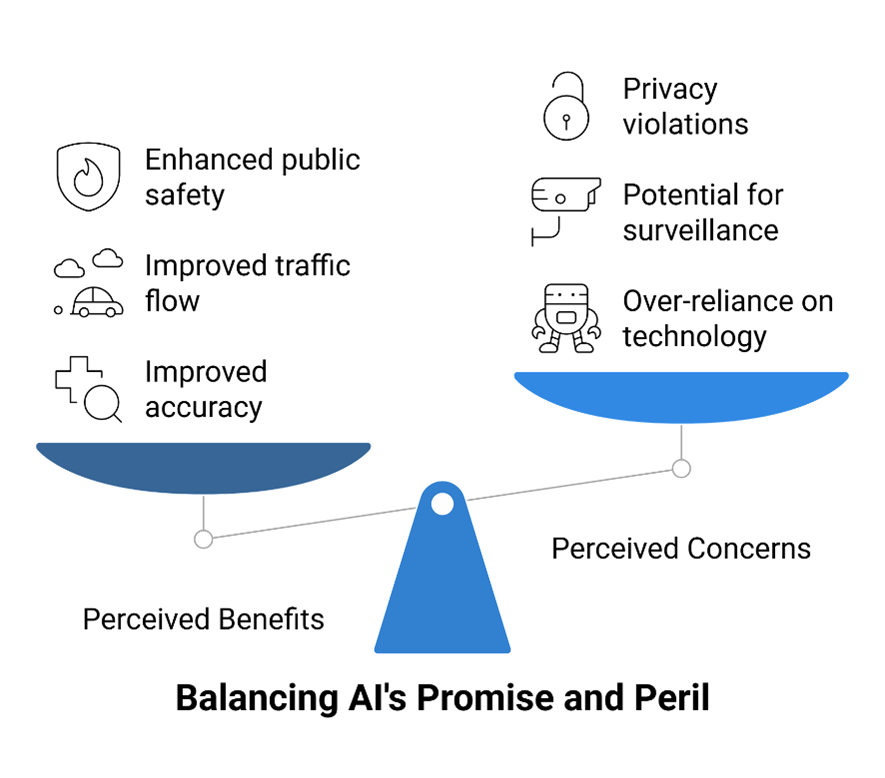

Public Perception of AI Benefits vs. Concerns for Select Applications

| AI Application | Perceived Benefits | Perceived Concerns |
|---|---|---|
| Healthcare Diagnosis | Improved accuracy, faster diagnosis, increased accessibility | Over-reliance on technology, lack of human judgment, difficulty in assigning responsibility |
| Real-time Traffic Data Analysis | Improved traffic flow, reduced congestion | Potential for surveillance, privacy implications |
| CCTV Security Monitoring | Enhanced public safety, crime prevention | Privacy violations, potential for misuse, algorithmic bias in identification |
| Personalized Learning Materials | Tailored education, improved learning outcomes | Data privacy, potential for algorithmic bias, over-reliance on technology |
| Autonomous Vehicles | Increased efficiency, reduced accidents (potential) | Safety risks, job displacement, ethical dilemmas in accident scenarios, lack of control |

Leading Voices in AI Ethics: Citing Prominent Figures and Their Contributions

The field of AI ethics has been significantly shaped by the contributions of numerous prominent figures who have dedicated their work to understanding and addressing the complex ethical challenges posed by artificial intelligence. Their insights and research have been instrumental in raising awareness, shaping policy discussions, and developing frameworks for responsible AI development and deployment.

Fairness: Dr. Joy Buolamwini, a renowned researcher and advocate, has been at the forefront of highlighting algorithmic bias, particularly in facial recognition technology, and advocating for greater fairness and accountability in AI systems. Her work has demonstrated how biases in training data can lead to discriminatory outcomes, especially for marginalized groups. Dr. Timnit Gebru, another leading voice in the field, has also made significant contributions to understanding data bias and the importance of fairness metrics in evaluating AI models. Her research emphasizes the need for diverse and representative datasets and a critical examination of the societal impact of AI technologies. Cathy O'Neil, through her influential book "Weapons of Math Destruction," has brought the societal impact of algorithms to a wider audience, illustrating how opaque and biased algorithms can perpetuate and amplify inequality in various domains, including finance and criminal justice.

Transparency and Explainability: Dr. Cynthia Rudin is a strong advocate for interpretable machine learning, arguing that AI models should be transparent and understandable, especially in high-stakes applications. Her research focuses on developing models that are inherently interpretable rather than relying on post-hoc explanations. Researchers working on techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations) have also made significant contributions by developing methods to provide insights into the decision-making processes of complex "black box" AI models, enhancing transparency and fostering trust.
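SHAP is based on Shapley values from cooperative game theory, which divide a single prediction among the input features according to each feature's average marginal contribution across all coalitions of the other features. For a model with only a handful of features these values can be computed exactly by brute force, as in the sketch below. It assumes that a feature "absent" from a coalition is replaced by a fixed baseline value, which is one of several possible choices; the function is a hypothetical illustration and does not use or reproduce the API of the `shap` library, which relies on faster approximations for realistic models.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attributions for one prediction `model(x)`.

    Features outside a coalition take their `baseline` value (an assumed
    choice of value function). Cost grows as 2**n, so this is only
    practical for very small numbers of features.
    """
    n = len(x)

    def value(coalition):
        # Evaluate the model with coalition features from x, the rest from baseline.
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(mixed)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight: |S|! * (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi += weight * (value(coalition | {i}) - value(coalition))
        attributions.append(phi)
    return attributions
```

For a toy linear model `2*z[0] + 3*z[1] - z[2]` with input `[1, 2, 3]` and an all-zero baseline, the attributions come out as approximately `[2, 6, -3]`, which sum to the difference between the prediction and the baseline prediction (5). This additivity is what makes Shapley-based explanations attractive, while the exponential cost is why practical tools approximate them.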

Accountability and Governance: Professor Luciano Floridi, a leading scholar in the ethics of information, has extensively written about the ethical implications of AI and the need for robust governance frameworks. His work explores the philosophical underpinnings of AI ethics and provides valuable insights into the development of responsible AI policies. Organizations like NIST and the OECD, through their comprehensive AI risk management frameworks and principles, have also played a crucial role in shaping the discourse on accountability and governance in AI, providing practical guidance for organizations and policymakers.

Privacy and Data Protection: Dr. Shoshana Zuboff's groundbreaking book "The Age of Surveillance Capitalism" has illuminated the ethical challenges posed by the widespread collection and use of personal data in the digital age, including its implications for AI development. Her analysis highlights the power imbalances created by the commodification of personal information and the urgent need for stronger privacy protections. Numerous experts in data privacy law and ethics continue to contribute to this critical area, working to develop legal frameworks and ethical guidelines that can safeguard individual privacy in an era of increasingly sophisticated AI technologies.

Conclusion: Charting a Course Towards Responsible and Ethical AI Development and Deployment

The journey towards a future where artificial intelligence serves humanity ethically and equitably requires a concerted and sustained effort from a multitude of stakeholders. This whitepaper has explored the multifaceted landscape of ethical AI, from its foundational principles and the diverse codes and guidelines shaping its development, to the real-world consequences of ethical breaches and the critical role of good governance in mitigating these risks. The evolving nature of AI ethics necessitates a continuous process of learning, adaptation, and proactive engagement with emerging challenges.

The imperative of ethical AI development and deployment cannot be overstated. As AI becomes increasingly integrated into our lives, ensuring fairness, transparency, accountability, and privacy is paramount for building public trust and realizing the full potential of this transformative technology. The statistical data presented underscores the prevalence of bias in AI systems across various sectors and the significant financial and reputational risks associated with ethical breaches. Addressing these challenges requires a commitment to implementing robust AI governance frameworks that go beyond mere legal compliance and embed ethical considerations into every stage of the AI lifecycle.

A multi-stakeholder approach is essential for fostering responsible AI. Organizations must prioritize ethical principles in their AI strategies, investing in diverse teams, implementing bias detection and mitigation techniques, and ensuring transparency and accountability in their AI systems. Governments and regulatory bodies play a crucial role in establishing clear guidelines and regulations that promote ethical AI practices and protect fundamental rights. Research institutions have a responsibility to continue exploring the ethical implications of AI and developing innovative solutions to address emerging challenges. Finally, public awareness and engagement are vital for shaping the ethical trajectory of AI, ensuring that societal values and concerns are reflected in its development and deployment.

Charting a course towards responsible and ethical AI development and deployment is an ongoing endeavor. By prioritizing ethics in the AI revolution, fostering collaboration among diverse stakeholders, and embracing a continuous cycle of learning and adaptation, we can strive to create an AI-driven world that is both innovative and just, ultimately benefiting all of humanity.